Towards Comparing Learned Classifiers

Abstract

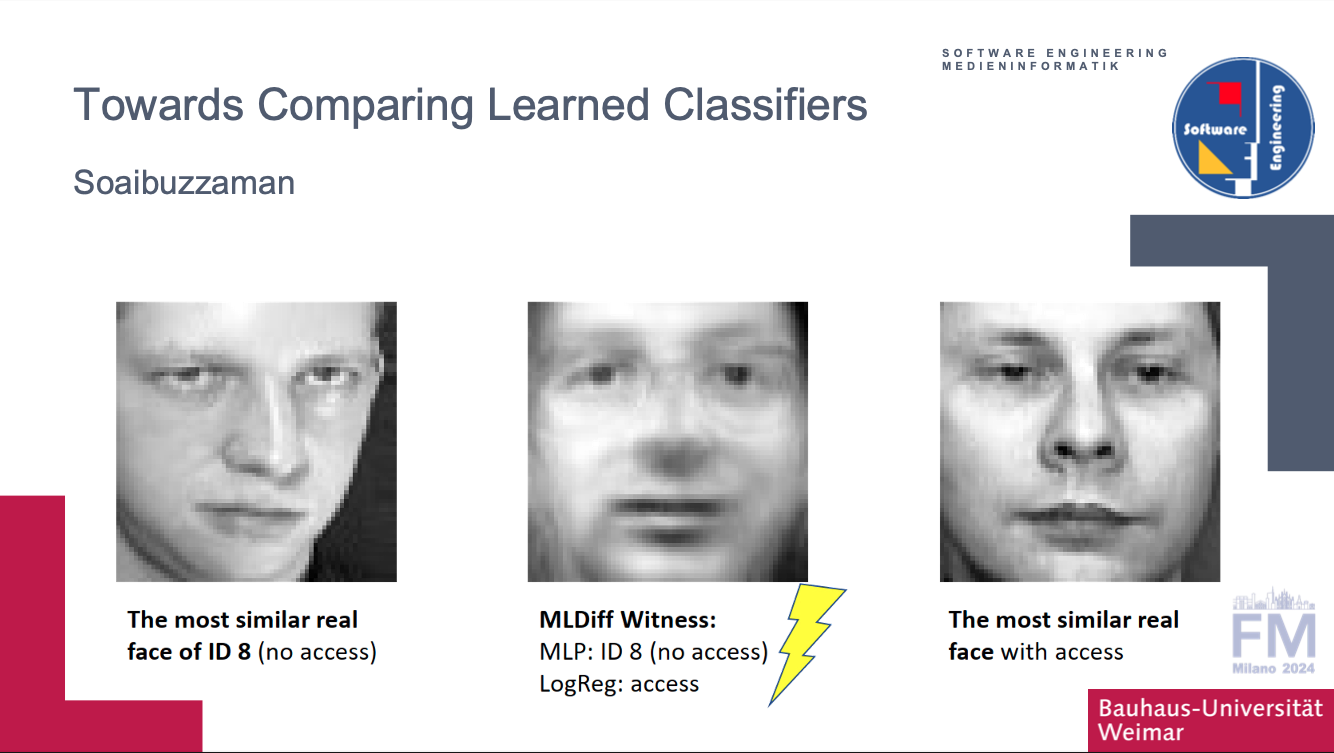

Machine learning for classification has been applied to numerous complex, real-world tasks. Learned classifiers have become important artifacts of software systems that, like code, require careful analysis, maintenance, and evolution. Existing work on the formal verification of learned classifiers has mainly focused on properties of individual classifiers, e.g., safety, fairness, or robustness, but not on analyzing the commonalities and differences of multiple classifiers. This PhD project envisions MLDiff, a novel approach to comparing learned classifiers where one is an alternative or variant of another. MLDiff will leverage an encoding into satisfiability modulo theories (SMT) and can therefore discover differences that have not (yet) been observed in the available datasets. We outline the challenges of MLDiff and present an early prototype.
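To illustrate what "discovering differences not seen in a dataset" means, the sketch below compares two classifiers over their entire input space rather than over sample points. It is not MLDiff's SMT encoding, which the abstract does not detail. Instead, to stay dependency-free, it swaps in a simpler exact technique that only works for axis-aligned decision trees: because such trees are piecewise constant, collecting every split threshold from both trees partitions the input space into cells on which both trees are constant, and checking one representative point per cell finds every disagreement region. The tree encoding (`("leaf", label)` / `("split", feature, threshold, left, right)`) and all function names are hypothetical choices made for this example.

```python
from itertools import product

# Hypothetical tree encoding used only for this sketch:
#   ("leaf", label)  or  ("split", feature, threshold, left, right)
# Inputs with x[feature] <= threshold go to the left subtree.
Tree = tuple


def predict(tree: Tree, x: tuple) -> int:
    """Walk from the root to a leaf and return the leaf's label."""
    while tree[0] == "split":
        _, feature, threshold, left, right = tree
        tree = left if x[feature] <= threshold else right
    return tree[1]


def collect_thresholds(tree: Tree, acc: dict) -> None:
    """Record every split threshold of the tree, grouped by feature index."""
    if tree[0] == "split":
        _, feature, threshold, left, right = tree
        acc.setdefault(feature, set()).add(threshold)
        collect_thresholds(left, acc)
        collect_thresholds(right, acc)


def representatives(thresholds: set) -> list:
    """One point per interval (-inf, t1], (t1, t2], ..., (tk, +inf)."""
    if not thresholds:
        return [0.0]  # feature is never tested, so any value is equivalent
    ts = sorted(thresholds)
    # t_i lies in (t_{i-1}, t_i]; t_k + 1 lies in (t_k, +inf)
    return ts + [ts[-1] + 1.0]


def diff_witnesses(tree_a: Tree, tree_b: Tree, n_features: int) -> list:
    """Return (point, label_a, label_b) for every cell where the trees disagree."""
    thresholds: dict = {}
    collect_thresholds(tree_a, thresholds)
    collect_thresholds(tree_b, thresholds)
    axes = [representatives(thresholds.get(f, set())) for f in range(n_features)]
    witnesses = []
    for point in product(*axes):
        label_a, label_b = predict(tree_a, point), predict(tree_b, point)
        if label_a != label_b:
            witnesses.append((point, label_a, label_b))
    return witnesses


# Two variants: tree_b refines the right branch of tree_a with a split on x1.
tree_a = ("split", 0, 5.0, ("leaf", 0), ("leaf", 1))
tree_b = ("split", 0, 5.0, ("leaf", 0),
          ("split", 1, 2.0, ("leaf", 0), ("leaf", 1)))

# A small (made-up) dataset on which both variants happen to agree.
dataset = [(1.0, 0.0), (9.0, 4.0)]
print([x for x in dataset if predict(tree_a, x) != predict(tree_b, x)])  # []
print(diff_witnesses(tree_a, tree_b, n_features=2))  # [((6.0, 2.0), 1, 0)]
```

The dataset comparison reports no difference, while the whole-space check yields the witness `(6.0, 2.0)`, standing for the region x0 > 5, x1 <= 2 where the variants disagree. The number of cells grows as the product of (thresholds per feature + 1), which is one reason a symbolic encoding such as SMT, which avoids enumerating cells, is attractive for larger models.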